Earn your degree in Internal Linking Surgery

Something I often notice in link building is how poor the internal linking strategies are on most sites that go after external links. External links are definitely more powerful than internal ones, but forgetting to optimize the latter means missing a big zero-cost opportunity. So here’s some easy advice: before spending hours and money chasing links from other sites, try adding a few on your own domain.

It’s not rocket science and a number of posts about internal linking already exist out there.

But I’m the kind of guy who likes doing things within a strategy, and one can do better than putting links on their own pages wherever possible.

So today I’m showing how to:

- Quickly find the right pages in which to add an internal link;

- Quickly check whether a link already exists on them;

- Easily choose the most effective anchor text for each of them.

Are you interested? Let’s go.

Step 1: Scrape a list of URLs from your own database

The first task is choosing the pages where you might add a link. This typically means that, given a database and a target keyword, you search for the keyword in your DB and edit a few of the results (ideally the best ones) to insert the link.

Very easy, but with one little problem: if you have tons of content, you’ll get a large number of pages to check and modify, and you don’t want to waste time checking them manually one by one.

So, let’s ask our friendly tool Scraper for Chrome for some help.

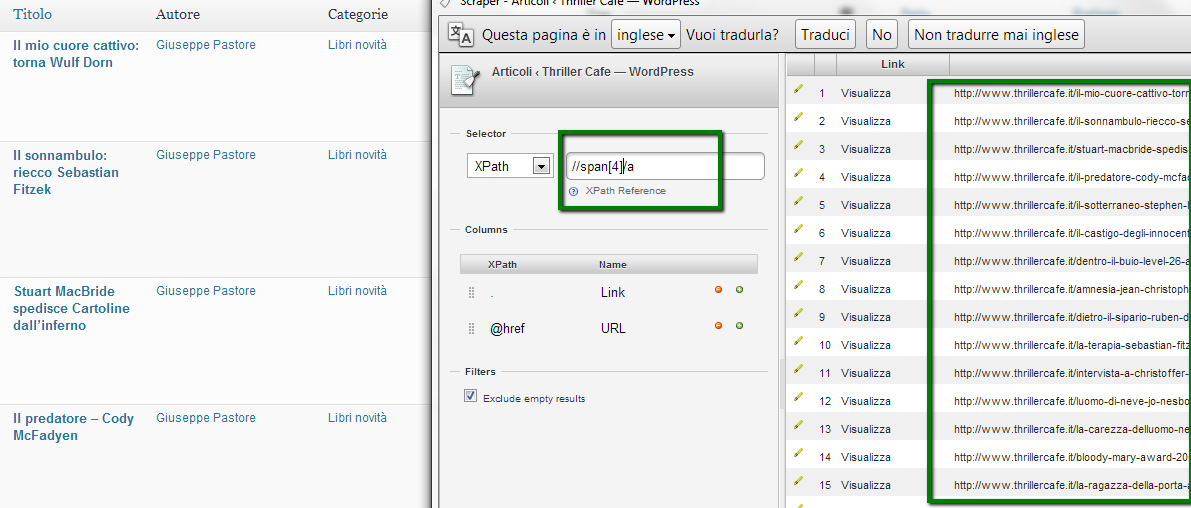

In this screenshot, I’m searching for Psycho in my ThrillerCafe.it WordPress database (it’s a site about crime novels) because I want to link to the page for Robert Bloch’s novel from other pages that mention the book (or the word psycho).

I’ve found 21 articles.

I right-click on View and scrape the //span[4]/a XPath, which gives me all the URLs of the pages I have to check, ready to be exported to a spreadsheet.
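For readers who prefer a script to the browser extension, the same XPath can be applied in a few lines of Python. This is only a sketch: the markup in SAMPLE_ROWS is a made-up, simplified stand-in for the WordPress post list (the real admin HTML is not shown in this post), and the function name scrape_view_urls is hypothetical. The standard-library parser used here needs well-formed markup, so a real page would probably need a more tolerant HTML parser.

```python
import xml.etree.ElementTree as ET

# Made-up, simplified stand-in for the admin post list: each row has four
# action links, and the fourth <span> holds the "View" link.
SAMPLE_ROWS = """
<table>
  <tr><td>
    <span><a href="#edit-1">Edit</a></span>
    <span><a href="#quick-1">Quick Edit</a></span>
    <span><a href="#trash-1">Trash</a></span>
    <span><a href="https://example.com/psycho-review/">View</a></span>
  </td></tr>
  <tr><td>
    <span><a href="#edit-2">Edit</a></span>
    <span><a href="#quick-2">Quick Edit</a></span>
    <span><a href="#trash-2">Trash</a></span>
    <span><a href="https://example.com/hitchcock-films/">View</a></span>
  </td></tr>
</table>
"""


def scrape_view_urls(markup: str) -> list[str]:
    """Return the href of every link matched by //span[4]/a."""
    root = ET.fromstring(markup)
    # ElementTree wants a relative path, hence the leading dot.
    return [a.get("href") for a in root.findall(".//span[4]/a")]


if __name__ == "__main__":
    for url in scrape_view_urls(SAMPLE_ROWS):
        print(url)
```

The returned list can then be pasted or written into column A of the spreadsheet, one URL per row.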

Step 2: Check if a link already exists

Now that we have a list of URLs, we have to separate the pages that already link to our target from those that don’t.

Here, a couple of immediate possibilities exist:

- I have 21 URLs to check, and maybe I could afford to browse each of them, but with a larger number of results this wouldn’t be possible, and anyway I don’t like doing manual stuff.

- I could use a crawler such as Xenu or Screaming Frog to check the list and see which pages link to the target.

Since I really don’t like the first approach, I might go for the second one, but my URLs are already in Excel and I’ll be using Excel again for the third step, so at this point I prefer our awesome friend SEO Tools for Excel.

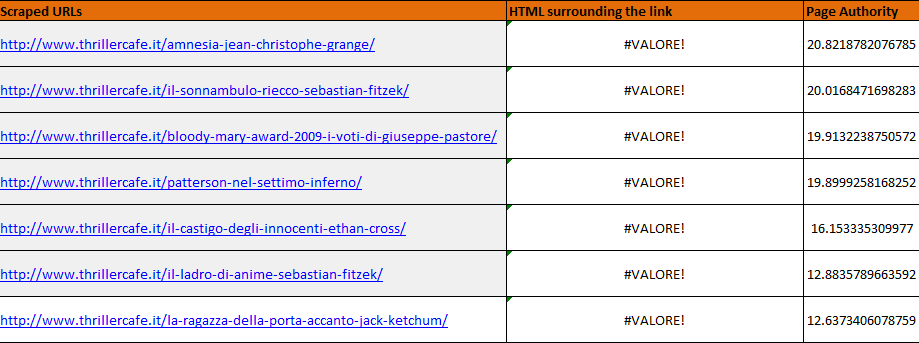

With the scraped URLs in a spreadsheet (column A, starting at row 8), I can use the RegexpFindOnUrl function to extract the HTML surrounding the link (pattern in D2), if it exists:

=RegexpFindOnUrl($A8;CONCATENATE("(.*)";$D$2;"(.*)"))

In my case, only one page already links to my target (with a correct anchor text), and 20 are ready to host a new link.
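The same check can be sketched outside Excel. The snippet below mimics the formula by wrapping a pattern in (.*) on both sides, so a match returns the whole line of HTML around the link. The function name find_link_context, the sample pages and the target pattern are all made up for illustration, and downloading each URL is left out so the example runs offline.

```python
import re


def find_link_context(html: str, pattern: str) -> str:
    """Return the line of HTML containing `pattern`, or "" if absent.

    `pattern` is treated as a regular expression, like the value in D2.
    Use re.escape() first if you want to match a literal URL.
    """
    match = re.search("(.*)" + pattern + "(.*)", html)
    return match.group(0) if match else ""


# Hypothetical page bodies keyed by URL (in practice, the downloaded HTML).
PAGES = {
    "https://example.com/a/": (
        '<p>Read <a href="/psycho/">Psycho</a> now.</p>\n<p>More.</p>'
    ),
    "https://example.com/b/": "<p>Psycho is mentioned here, without a link.</p>",
}

# Hypothetical target: a link whose href points at the novel's page.
TARGET = re.escape('href="/psycho/"')

if __name__ == "__main__":
    for url, html in PAGES.items():
        context = find_link_context(html, TARGET)
        print(url, "->", context or "no link yet")
```

An empty result marks a page that is still free to host a new link, which is the same split the spreadsheet column gives.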

Step 3: Add links, with the best anchor texts on the best pages

At this point, I could just start adding links to all the pages. The more, the better, right? Yes and no. A more refined strategy is to vary the anchor texts, using the best ones (exact match or partial match) on the stronger pages and a generic or bare-URL anchor text on the weaker ones. With a large number of results, I might also plan multiple tiers of internal links.

Here I use the Moz API to sort pages by Page Authority. To use it, you have to register with Moz (join here); since Page Authority is provided for free, you don’t need a paid plan.

Now I can add a column where, with your Access ID in B2 and your Secret Key in B3, I get:

=JsonPathOnUrl("http://lsapi.seomoz.com/linkscape/url-metrics/"&A8&"?Cols=34359738368";"$..upa";BuildHttpDownloaderConfig(TRUE;;;;;;$B$2;$B$3))

This means: “get the upa response field of the JSON that Moz returns, using 34359738368 as the bit flag” (check the Mozscape Wiki for more information).

In simpler words, it retrieves the Page Authority of my scraped URLs.
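To make the two moving parts of that formula more concrete, here is a rough Python sketch of what it does: build the request URL with the Cols bit flag, then pull every upa value out of the JSON, at any depth, which is what the $..upa path asks for. The function names are hypothetical, the sample response is invented just to show the extraction step, and percent-encoding the page URL is my assumption (the formula passes it as is). Authentication with the Access ID and Secret Key is not covered, and the endpoint is simply the one from the formula, so it may not respond the same way today.

```python
import json
from urllib.parse import quote

# Bit flag for Page Authority, the same number used in the formula.
PAGE_AUTHORITY_FLAG = 1 << 35  # 34359738368

API_ROOT = "http://lsapi.seomoz.com/linkscape/url-metrics/"


def build_request_url(page_url: str, cols: int = PAGE_AUTHORITY_FLAG) -> str:
    """Build the url-metrics request for one page.

    Percent-encoding the page URL is an assumption; the formula passes it raw.
    """
    return f"{API_ROOT}{quote(page_url, safe='')}?Cols={cols}"


def find_key(data, key):
    """Yield every value stored under `key` at any depth (like $..key)."""
    if isinstance(data, dict):
        for k, v in data.items():
            if k == key:
                yield v
            yield from find_key(v, key)
    elif isinstance(data, list):
        for item in data:
            yield from find_key(item, key)


if __name__ == "__main__":
    print(build_request_url("www.example.com/psycho/"))
    # Made-up response body, only to show the extraction step.
    sample = json.loads('{"upa": 41.5}')
    print(next(find_key(sample, "upa")))
```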

Sorting by decreasing PA, I can decide to use exact-match anchor texts on the stronger pages, thus maximizing the strength of the link.
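If you would rather not assign anchor types by hand, the sorting idea can be sketched in a few lines of Python. Everything here is hypothetical: the Page class, the assign_anchor_types function, and especially the 25% shares, which are arbitrary illustration values and not a recommendation.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    page_authority: float


def assign_anchor_types(pages, exact_share=0.25, partial_share=0.25):
    """Sort pages by PA (descending) and map each URL to an anchor type.

    The strongest pages get exact-match anchors, the next group partial
    match, and the rest a generic or bare-URL anchor.
    """
    ranked = sorted(pages, key=lambda p: p.page_authority, reverse=True)
    # Always give at least one page an exact-match anchor, if any exist.
    n_exact = max(1, round(len(ranked) * exact_share)) if ranked else 0
    n_partial = round(len(ranked) * partial_share)
    plan = {}
    for i, page in enumerate(ranked):
        if i < n_exact:
            plan[page.url] = "exact match"
        elif i < n_exact + n_partial:
            plan[page.url] = "partial match"
        else:
            plan[page.url] = "generic or URL"
    return plan


if __name__ == "__main__":
    # Invented PA values, only to show the output shape.
    pages = [Page("/a/", 40), Page("/b/", 10), Page("/c/", 30), Page("/d/", 20)]
    for url, anchor in assign_anchor_types(pages).items():
        print(url, "->", anchor)
```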

TL;DR

Internal links can be effective, and with Excel and a few scraping functions you can determine the best pages from which to link to your target.

If you liked the post and would like to use the Excel file, you can download it here.

Any links and shares would be appreciated. Thanks.

1 COMMENT

The link to the spreadsheet is broken. Can you re-post? I’m having a hard time following along with your example.

Thanks!